Having heavy traffic on your site or application is usually a good thing. Users are enjoying your product, your company is getting conversions, and everything seems swell, until your servers start to strain under an ever-increasing load. The cause could be a malicious attack, a bug in the system, or just a user trying to do too much. In any case, you should have safeguards in place to keep events like this from becoming serious, and rate limiting is usually one of them.

What Is Rate Limiting?

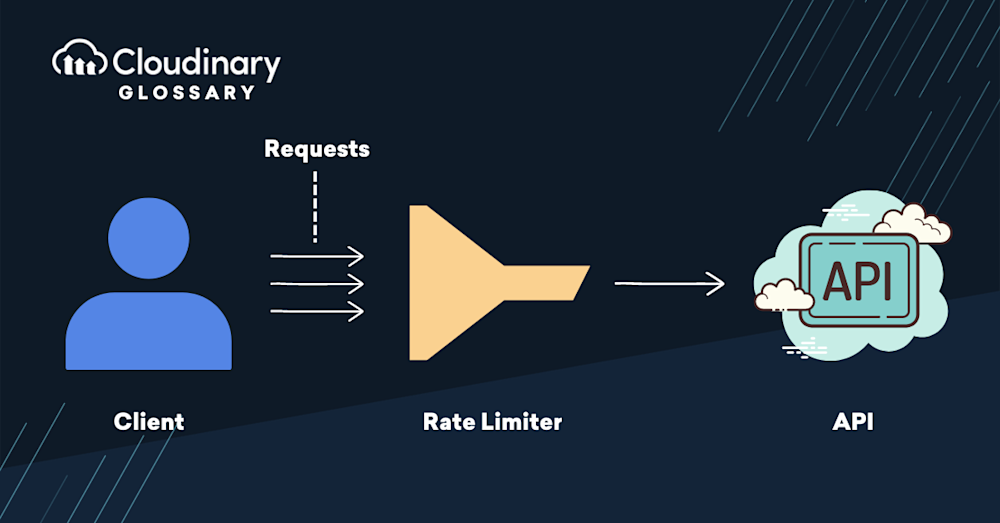

Rate limiting is a method of restricting network traffic on an application. In most cases, it’s a way to stop individual users from repeating certain actions too many times within a given timeframe. Activities like posting messages on a forum, logging in to an account, or using an API to send and receive data can all be abused in the wrong hands, which is why rate limiting has become such a common safeguard.

Why Would I Need Rate Limiting?

As we said before, the intention of rate limiting is to stop users from repeating actions too often. Without limits in place, malicious attackers can abuse your system or overload it with constant requests until it can no longer cope. It also helps prevent users from doing too many actions at once, like making too many social media posts at the same time.

Rate limiting is widely used in various contexts. For instance, platforms like Discord implement rate limiting to restrict users’ activities if they repeat any action too frequently. Similarly, ISPs may enforce rate limits based on service agreements, ensuring customers only use the bandwidth they have paid for.

How Can I Use Rate Limiting?

You can use rate limiting in a number of ways, such as:

- Preventing Excessive Login Attempts. If you have a website that allows users to sign up for an account and log in with their email addresses, you might want to limit how often each user can attempt to log in with their credentials. This will help prevent bots from signing up for accounts or trying to brute force their way into your system.

- Limiting Specific IP Addresses. If someone is testing your site’s defenses (or is just acting strange), you could set up a rule that limits or blocks requests from that IP address. Keep in mind that a determined visitor can get around an IP-based rule by switching addresses, for example through a VPN.

- Preventing Potential Exploits. Bots or malicious attackers probing for bugs in the code of your website or server can be slowed down or shut out with rate limiting. These attacks may be directed at specific pages or functionality and can range from benign attempts at discovering security flaws (that could then be reported back) to malicious attempts at stealing data.

- Preventing DDoS Attacks. In a distributed denial-of-service (DDoS) attack, bots flood your site with requests to exhaust its resources. This can be done in an attempt to take down the entire site or just as a way of testing it for vulnerabilities. Rate limiting helps cap how many of those requests your application has to handle.

- Applying Rate-Limiting Algorithms. Rate limiting can be implemented using various algorithms, such as a fixed window algorithm, which limits the number of requests a user can make in a specific time window (e.g., 100 requests per minute). This technical approach to rate limiting allows for precise control over traffic flow.
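As a rough illustration of the fixed window approach mentioned above, here is a minimal Python sketch. The class name `FixedWindowLimiter`, its parameters, and the choice of key (a user ID, email address, or IP address) are assumptions made for this example and don’t come from any particular library.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per key in each fixed time window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.state = {}  # key -> (window index, requests counted in that window)

    def allow(self, key):
        # Every timestamp falls into exactly one window: 0, 1, 2, ...
        window = int(self.clock() // self.window_seconds)
        seen_window, count = self.state.get(key, (window, 0))
        if seen_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            return False  # over the limit for this window
        self.state[key] = (window, count + 1)
        return True


# Hypothetical usage: at most 5 login attempts per minute for each email address.
login_limiter = FixedWindowLimiter(limit=5, window_seconds=60)
if not login_limiter.allow("user@example.com"):
    print("Too many login attempts, please try again later.")
```

A fixed window is simple and cheap to track, but a client can send a burst at the end of one window and another at the start of the next, so treat the limit as approximate near window boundaries.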

It’s important to monitor and limit the number of requests per second to your application, especially if it has high traffic. Rate limiting helps you enforce a maximum number of requests per second (RPS).

While rate limiting is essential for managing traffic, it can cost more system resources than simple throttling, because the application has to track each client’s traffic, often on a per-second basis. Keep this in mind when designing a rate limiting strategy so that it balances traffic control against system efficiency.

How Does Rate Limiting Work for APIs?

Rate limiting is a way to control how often a user can make requests to your API. A limit can be defined as a set number of requests per second, minute, hour, or day. For example:

- A user might be able to make five requests per second

- A user might be able to make 1,000 requests per minute

- A user might only be allowed ten requests every hour
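Limits like the ones listed above can be written down as pairs of "maximum requests" and "window length" and checked together. The sketch below does this with a sliding window log, which is one possible technique and not necessarily what any given API uses. The names `LIMITS` and `MultiWindowLimiter` and the specific numbers are assumptions for illustration.

```python
import time
from collections import deque

# Hypothetical limits: (max requests, window length in seconds).
LIMITS = [(5, 1), (1000, 60)]


class MultiWindowLimiter:
    """Reject a request if it would break any of several per-user limits."""

    def __init__(self, limits, clock=time.monotonic):
        self.limits = limits
        self.clock = clock  # injectable for testing
        self.longest = max(window for _, window in limits)
        self.history = {}  # user -> deque of accepted request times, oldest first

    def allow(self, user):
        now = self.clock()
        log = self.history.setdefault(user, deque())
        # Forget requests that are older than the longest window we care about.
        while log and now - log[0] >= self.longest:
            log.popleft()
        for max_requests, window in self.limits:
            recent = sum(1 for ts in log if now - ts < window)
            if recent >= max_requests:
                return False
        log.append(now)
        return True


limiter = MultiWindowLimiter(LIMITS)
print(limiter.allow("user-123"))  # the first request from a user is accepted
```

Keeping a timestamp per request is more precise than a single counter, but it uses more memory per user, which is one reason high-traffic services often prefer counter-based schemes.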

Most public and premium APIs are rate limited by default to prevent abuse. For example, Cloudinary’s free tier allows 500 requests per hour, which is enough for a small project to work without issue but not enough to invite misuse.

Strategies such as spreading requests over time, caching API calls for a few seconds, and minimizing repetitive API calls can be employed to avoid hitting rate limits. These approaches help ensure continuous access to the resource without triggering the rate limiting mechanism.
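On the client side, two of these strategies (spacing requests out and caching responses for a few seconds) can be combined in a small wrapper. This is only a sketch under assumptions: `PacedCachedClient` is a made-up name, and `fetch` stands in for whatever function actually performs your API request.

```python
import time


class PacedCachedClient:
    """Wrap an API call with a short-lived cache and a minimum gap between calls."""

    def __init__(self, fetch, min_interval, ttl,
                 clock=time.monotonic, sleep=time.sleep):
        self.fetch = fetch  # hypothetical callable that performs the real request
        self.min_interval = min_interval  # minimum seconds between real requests
        self.ttl = ttl  # seconds a cached response stays valid
        self.clock = clock
        self.sleep = sleep
        self.cache = {}  # key -> (expiry time, value)
        self.last_call = None

    def get(self, key):
        now = self.clock()
        hit = self.cache.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # served from cache, no API request made
        if self.last_call is not None:
            wait = self.min_interval - (now - self.last_call)
            if wait > 0:
                self.sleep(wait)  # spread requests over time
        value = self.fetch(key)
        self.last_call = self.clock()
        self.cache[key] = (self.last_call + self.ttl, value)
        return value
```

With `min_interval=1.0` and `ttl=5.0`, for instance, repeated lookups of the same key within five seconds cost one real request, and different keys are requested at most once per second.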

What Is the Difference between Bot Management and Rate Limiting?

Rate limiting and bot management are two different things, but they both help you protect your site from abuse.

Rate limiting is a way to protect your site from people trying to do too much. If someone sends too many requests in a short period, the system will block them from making any further requests until a set time has passed.
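For an HTTP service, "blocked until a set time has passed" is commonly signaled with a `429 Too Many Requests` status and a `Retry-After` header telling the client how many seconds to wait. The sketch below shows one way that decision could be made. The `CooldownLimiter` class and its `check` method are hypothetical names, and the window here starts at a client’s first request, which is an assumption of this example.

```python
import math
import time


class CooldownLimiter:
    """After `limit` requests in a window, reject until that window has ended."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable for testing
        self.windows = {}  # client -> (window start time, accepted request count)

    def check(self, client):
        """Return (status code, headers) for one incoming request."""
        now = self.clock()
        start, count = self.windows.get(client, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # the block has expired, start a fresh window
        if count >= self.limit:
            # Tell the client how long to wait, rounded up to whole seconds.
            retry_after = math.ceil(self.window_seconds - (now - start))
            return 429, {"Retry-After": str(retry_after)}
        self.windows[client] = (start, count + 1)
        return 200, {}
```

A well-behaved client can read the `Retry-After` value and pause for that long instead of retrying immediately.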

Bot management is more about identifying bots and stopping unwanted ones from accessing your site at all. It’s not just about limiting how often they can access it or what pages they can visit; rather, it blocks requests from those bots outright.
